Test-Driven Development is one of those practices that, despite having been known to developers for a very long time, is still not widely used. I know many people who, when asked, agree with all the benefits that TDD brings, and yet these same people often don't practice it, claiming that "it doesn't work in my project."

Most often, however, the problem is not the specific project but a lack of experience and skill in using TDD. One of the most common mistakes made by people starting out with Test-Driven Development is trying to apply the technique to every new class or method. In this article, I will explain why this is a bad idea and how you can avoid this mistake. If you want to learn more about this topic, also check out the Masterclass Clean Architecture course.

What is Test-Driven Development?

Test-Driven Development is a technique that helps create production code that is easy to use and free of redundant implementation. It is important to remember that TDD is not a technique designed to create good tests (although good tests are certainly a positive side effect). Tests are a tool that helps us write better production code.

The core idea of TDD is to split each cycle of adding code into three phases:

- Red - we create a test that runs, but doesn't pass.

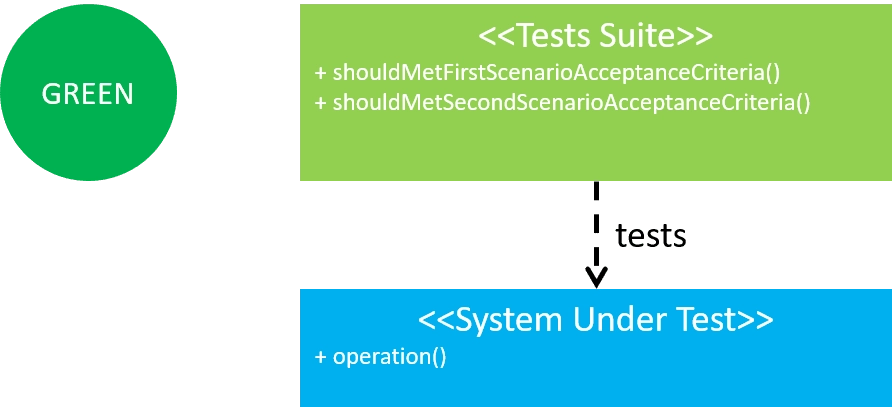

- Green - we add code that makes the test firing successful.

- Refactoring - we improve the quality of the written code.

How does the problem arise?

Let's look at an example of using Test-Driven Development:

- We start by writing the first test scenario and running it; it does not pass.

- Then we add code that meets the requirements of the first test.

- Next, we refactor the tests and/or the production code if needed.

- We add another test scenario.

- We add code that meets the new requirements.

- We refactor again.
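The steps above can be sketched in Python roughly as follows. This is a minimal sketch under assumed details: only the class names `SystemUnderTests`, `Dependency1` and `Dependency2` come from the text; the domain (an order total with a 10% discount above 100) and the methods `total`, `subtotal` and `apply` are hypothetical, invented for illustration. It shows a possible state after the last refactoring, with both scenarios written as pytest-style test functions that exercise only the SUT.

```python
import math


# Hypothetical domain: SystemUnderTests computes the total price of an order.
# Dependency1 and Dependency2 stand for the classes extracted while refactoring.
class Dependency1:
    """Hypothetical: sums line items given as (price, quantity) pairs."""

    def subtotal(self, items):
        return sum(price * quantity for price, quantity in items)


class Dependency2:
    """Hypothetical: applies a 10% discount to amounts strictly above THRESHOLD."""

    THRESHOLD = 100
    RATE = 0.10

    def apply(self, amount):
        if amount > self.THRESHOLD:
            return amount * (1 - self.RATE)
        return amount


class SystemUnderTests:
    def __init__(self):
        # Internal details of the SUT; the tests below never touch them.
        self._dependency1 = Dependency1()
        self._dependency2 = Dependency2()

    def total(self, items):
        return self._dependency2.apply(self._dependency1.subtotal(items))


# First scenario: written first (Red), then made to pass (Green).
def test_total_is_sum_of_items():
    assert SystemUnderTests().total([(20, 2), (10, 1)]) == 50


# Second scenario: the discount requirement. The refactoring after it
# moved the summing and the discount logic into the two dependencies.
def test_discount_applied_above_threshold():
    assert math.isclose(SystemUnderTests().total([(60, 2)]), 108)
```

Note that the tests know nothing about `Dependency1` and `Dependency2`: both classes emerged from refactoring and are covered only indirectly, through the SUT.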

In this example, during refactoring we extracted two dependencies, Dependency1 and Dependency2, from our SystemUnderTests (SUT) class. This is the point at which many developers make a mistake: they start adding tests for the newly created dependencies. What's more, they often try to do this using Test-Driven Development.

This approach often leads developers to abandon TDD because of the excessive cost and effort it brings. Frankly, I might agree with them, if it weren't for the fact that... it has nothing to do with Test-Driven Development.

Why is this a bad thing?

Let me say it again: adding tests for newly extracted dependencies is in no way part of TDD. That's not how the technique works. But why is taking this detour so problematic?

- The test scenarios we add in the Red steps are meant to drive functionality that fulfills the new requirements. Fulfilling them is the main reason the SUT is created at all and developed over subsequent cycles. If we start writing tests for dependencies created through refactoring and developing them with TDD, we may end up adding functionality there that merely seems as if it might be useful later.

- Adding tests for each dependency pulls us away from adding further test scenarios aimed at developing the main functionality (the SUT), and so slows down the very process of fulfilling the requirements.

- In subsequent cycles, as we add more scenarios and modify the SUT implementation, we may conclude that the earlier refactorings (extracting the Dependency1 and Dependency2 classes) are not needed after all and can be removed or modified. Until the SUT implementation is finished, the class structure can change many times. If that happens, the effort put into testing the dependencies will be wasted.

How to do it right?

- Focus on developing the SUT - delivering its functionality is our main task.

- Don't waste your time tweaking and developing dependencies that can still change.

- Don't waste your time testing dependencies that may disappear as soon as you add another test scenario.

- Don't stop developing the SUT as long as you can still add another test that doesn't pass. Only when you can't find such a test should you focus on developing and testing the extracted dependencies.

Therefore, our next step should not be to add tests for Dependency1 and Dependency2, but to add another test scenario for our functionality (the SUT).
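A minimal Python sketch of such a next cycle follows, under hypothetical assumptions: the SUT computes an order total, `Dependency1` sums (price, quantity) line items, `Dependency2` applies a 10% discount above 100, and the new requirement (rejecting non-positive quantities with a `ValueError`) is invented for illustration. The new scenario is written against the SUT first and fails (Red); only then is the SUT changed to satisfy it (Green). `Dependency1` and `Dependency2` still get no tests of their own.

```python
import math


# Hypothetical classes extracted in earlier refactorings; still untested directly.
class Dependency1:
    """Hypothetical: sums line items given as (price, quantity) pairs."""

    def subtotal(self, items):
        return sum(price * quantity for price, quantity in items)


class Dependency2:
    """Hypothetical: applies a 10% discount to amounts strictly above THRESHOLD."""

    THRESHOLD = 100
    RATE = 0.10

    def apply(self, amount):
        if amount > self.THRESHOLD:
            return amount * (1 - self.RATE)
        return amount


class SystemUnderTests:
    def __init__(self):
        self._dependency1 = Dependency1()
        self._dependency2 = Dependency2()

    def total(self, items):
        # Green step of the new cycle: added only after the new
        # SUT-level test below was written and seen to fail.
        if any(quantity <= 0 for _, quantity in items):
            raise ValueError("quantity must be positive")
        return self._dependency2.apply(self._dependency1.subtotal(items))


# Scenarios from the earlier cycles, still exercised through the SUT only.
def test_total_is_sum_of_items():
    assert SystemUnderTests().total([(20, 2), (10, 1)]) == 50


def test_discount_applied_above_threshold():
    assert math.isclose(SystemUnderTests().total([(60, 2)]), 108)


# The new scenario (written first, Red): another requirement of the SUT,
# not a test of Dependency1 or Dependency2.
def test_rejects_non_positive_quantity():
    try:
        SystemUnderTests().total([(10, 0)])
    except ValueError:
        return
    raise AssertionError("expected ValueError for a non-positive quantity")
```

Whether the validation ends up in the SUT or later moves into one of the dependencies is left open; while the SUT is still being developed, that structure may change again.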

TDD is not the end

Of course, this does not mean that tests for the Dependency1 and Dependency2 classes will never be added. Once TDD is over, you may no longer be able to add any new scenario that fails, yet you may still see places or scenarios worth additional verification:

- You may want to add tests to dependencies that were created during refactoring.

- Sometimes you need to add tests with inputs more complex than those used during the TDD steps.
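For illustration, here is a minimal Python sketch of such post-TDD tests. It assumes, hypothetically, that `Dependency1` sums (price, quantity) line items and `Dependency2` applies a 10% discount to amounts strictly above 100; the method names `subtotal` and `apply` are likewise invented. These tests target the dependencies directly and use boundary values and larger, fractional inputs than a typical TDD step would.

```python
import math


# Hypothetical versions of the classes extracted during refactoring.
class Dependency1:
    """Hypothetical: sums line items given as (price, quantity) pairs."""

    def subtotal(self, items):
        return sum(price * quantity for price, quantity in items)


class Dependency2:
    """Hypothetical: applies a 10% discount to amounts strictly above THRESHOLD."""

    THRESHOLD = 100
    RATE = 0.10

    def apply(self, amount):
        if amount > self.THRESHOLD:
            return amount * (1 - self.RATE)
        return amount


# Tests added after TDD is over: direct checks of the dependencies.
def test_dependency1_handles_many_items_and_fractional_prices():
    items = [(0.99, 3), (12.50, 4), (100, 1), (0.01, 1000)]
    # 2.97 + 50.0 + 100 + 10.0 = 162.97
    assert math.isclose(Dependency1().subtotal(items), 162.97)


def test_dependency2_does_not_discount_exactly_at_threshold():
    assert Dependency2().apply(100) == 100


def test_dependency2_discounts_just_above_threshold():
    assert math.isclose(Dependency2().apply(100.01), 90.009)
```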

Summary

Test-Driven Development is a technique for developing production code, not for writing tests. This means that after TDD is over, there may still be parts of the code that you will want to verify. It's also important to remember that we use this technique most often when adding new functionality, and it is the shape and implementation of that functionality (regardless of how many elements it consists of) that TDD helps us take care of. It is at the level of the functionality, not of each individual class, that Test-Driven Development brings more benefit than it costs in effort.

Want to expand your knowledge?

Check out the Masterclass Clean Architecture course. The course covers best practices related to architecture, software quality and software maintenance. During the course you will learn the theory, the most common problems, and the practical application of patterns, practices and techniques such as hexagonal architecture, CQRS, test-driven development, domain-driven design, and many others. The course is NOT intended to cover each technique comprehensively, but to show its practical application in everyday application development.